在导入数据的时候,服务器突然无反应,连接不上,基本上就判定死掉了,重启服务器后查看日志如下:

Mar 26 08:13:01 localhost kernel: INFO: task flush-8:0:26079 blocked for more than 120 seconds.

Mar 26 08:13:01 localhost kernel: Tainted: P --------------- 2.6.32-431.el6.x86_64 #1

Mar 26 08:13:01 localhost kernel: "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

Mar 26 08:13:01 localhost kernel: flush-8:0 D 0000000000000001 0 26079 2 0x00000080

Mar 26 08:13:01 localhost kernel: ffff8804359118c0 0000000000000046 0000000000000000 ffff880436a5cb18

Mar 26 08:13:01 localhost kernel: ffff880435911860 ffffffff81068a53 ffffffffa0109300 ffff880436a5cb18

Mar 26 08:13:01 localhost kernel: ffff880436a5d098 ffff880435911fd8 000000000000fbc8 ffff880436a5d098

Mar 26 08:13:01 localhost kernel: Call Trace:

Mar 26 08:13:01 localhost kernel: [<ffffffff81068a53>] ? dequeue_entity+0x113/0x2e0

Mar 26 08:13:01 localhost kernel: [<ffffffffa0109300>] ? noalloc_get_block_write+0x0/0x60 [ext4]

Mar 26 08:13:01 localhost kernel: [<ffffffff8111f930>] ? sync_page+0x0/0x50

Mar 26 08:13:01 localhost kernel: [<ffffffff815280a3>] io_schedule+0x73/0xc0

Mar 26 08:13:01 localhost kernel: [<ffffffff8111f96d>] sync_page+0x3d/0x50

Mar 26 08:13:01 localhost kernel: [<ffffffff8152893a>] __wait_on_bit_lock+0x5a/0xc0

Mar 26 08:13:01 localhost kernel: [<ffffffff8111f907>] __lock_page+0x67/0x70

Mar 26 08:13:01 localhost kernel: [<ffffffff8109b320>] ? wake_bit_function+0x0/0x50

Mar 26 08:13:01 localhost kernel: [<ffffffff81135bf5>] ? pagevec_lookup_tag+0x25/0x40

Mar 26 08:13:01 localhost kernel: [<ffffffffa010db8f>] write_cache_pages_da+0x44f/0x470 [ext4]

Mar 26 08:13:01 localhost kernel: [<ffffffffa00df495>] ? jbd2_journal_start+0xb5/0x100 [jbd2]

Mar 26 08:13:01 localhost kernel: [<ffffffffa010de82>] ext4_da_writepages+0x2d2/0x620 [ext4]

Mar 26 08:13:01 localhost kernel: [<ffffffff81134c91>] do_writepages+0x21/0x40

Mar 26 08:13:01 localhost kernel: [<ffffffff811b4ffd>] writeback_single_inode+0xdd/0x290

Mar 26 08:13:01 localhost kernel: [<ffffffff811b53fd>] writeback_sb_inodes+0xbd/0x170

Mar 26 08:13:01 localhost kernel: [<ffffffff811b555b>] writeback_inodes_wb+0xab/0x1b0

Mar 26 08:13:01 localhost kernel: [<ffffffff811b5953>] wb_writeback+0x2f3/0x410

Mar 26 08:13:01 localhost kernel: [<ffffffff81527910>] ? thread_return+0x4e/0x76e

Mar 26 08:13:01 localhost kernel: [<ffffffff81084db2>] ? del_timer_sync+0x22/0x30

Mar 26 08:13:01 localhost kernel: [<ffffffff811b5c15>] wb_do_writeback+0x1a5/0x240

Mar 26 08:13:01 localhost kernel: [<ffffffff811b5d13>] bdi_writeback_task+0x63/0x1b0

Mar 26 08:13:01 localhost kernel: [<ffffffff8109b127>] ? bit_waitqueue+0x17/0xd0

Mar 26 08:13:01 localhost kernel: [<ffffffff81143990>] ? bdi_start_fn+0x0/0x100

Mar 26 08:13:01 localhost kernel: [<ffffffff81143a16>] bdi_start_fn+0x86/0x100

Mar 26 08:13:01 localhost kernel: [<ffffffff81143990>] ? bdi_start_fn+0x0/0x100

Mar 26 08:13:01 localhost kernel: [<ffffffff8109aef6>] kthread+0x96/0xa0

Mar 26 08:13:01 localhost kernel: [<ffffffff8100c20a>] child_rip+0xa/0x20

Mar 26 08:13:01 localhost kernel: [<ffffffff8109ae60>] ? kthread+0x0/0xa0

Mar 26 08:13:01 localhost kernel: [<ffffffff8100c200>] ? child_rip+0x0/0x20

This is a know bug. By default Linux uses up to 40% of the available memory for file system caching. After this mark has been reached the file system flushes all outstanding data to disk causing all following IOs going synchronous. For flushing out this data to disk this there is a time limit of 120 seconds by default. In the case here the IO subsystem is not fast enough to flush the data withing 120 seconds. This especially happens on systems with a lof of memory.

The problem is solved in later kernels and there is not “fix” from Oracle. I fixed this by lowering the mark for flushing the cache from 40% to 10% by setting “vm.dirty_ratio=10″ in /etc/sysctl.conf. This setting does not influence overall database performance since you hopefully use Direct IO and bypass the file system cache completely.

linux会设置40%的可用内存用来做系统cache,当flush数据时这40%内存中的数据由于和IO同步问题导致超时(120s),所将40%减小到10%,避免超时。

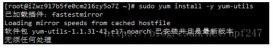

修改内核参数:

# vim /etc/sysctrl.conf vm.dirty_background_ratio = 5 vm.dirty_ratio = 10

参见:http://www.blackmoreops.com/2014/09/22/linux-kernel-panic-issue-fix-hung_task_timeout_secs-blocked-120-seconds-problem/